Rauthy

Rauthy - OpenID Connect Single Sign-On Identity & Access Management

This application received an independent security audit from

Radically Open Security

(Frank Plattel and Morgan Hill) as part

of the NGI Zero Core funding. There were some findings that were addressed

in v0.32.1. The full report can be found

here.

What it is

Rauthy is a lightweight and easy to use Identity Provider supporting OpenID Connect, OAuth 2.0 and

PAM. It aims to be simple to both set up and operate, with very secure defaults and lots of config

options, if you need the flexibility. It puts heavy emphasis on Passkeys and a very strong security

in general. The project is written in Rust to be as memory efficient, secure and fast as possible,

and it can run on basically any hardware. If you need Single Sign-On support for IoT or headless CLI

tools, it's got you covered as well.

You get High-Availability, client branding, UI translation, a nice Admin UI, Events and Auditing,

and many more features. By default, it runs on top of Hiqlite

and does not depend on an external database (Postgres as an alternative) to make it even simpler to

operate, while scaling up to millions of users easily.

Secure by default

It tries to be as secure as possible by default while still providing all the options needed to be

compatible with older systems. For instance, if you create a new OIDC client, it activates ed25519

as the default algorithm for token signing and S256 PKCE flow. This will not work with clients,

which do not support it, but you can of course deactivate this to your liking.

MFA and Passwordless Login

Option 1:

Password + Security Key (without User Verification):

Rauthy provides FIDO 2 / Webauthn login flows. If you once logged in on a new client with your

username + password, you will get an encrypted cookie which will allow you to log in without a

password from that moment on. You only need to have a FIDO compliant Passkey being registered for

your account.

Option 2:

Passkey-Only Accounts:

Rauthy supports Passkey-Only-Accounts: you basically just provide your E-Mail address and log in

with your FIDO 2 Passkey. Your account will not even have / need a password. This login flow is

restricted though to only those passkeys, that can provide User Verification (UV) to always have at

least 2FA security.

Discoverable credentials are discouraged with Rauthy (for good reason). This means you will need to enter your E-Mail for the login (which will be autofilled after the first one), but Rauthy passkeys do not use any storage on your device. For instance when you have a Yubikey which can store 25 passkeys, it will not use a single slot there even having full support.

Fast and efficient

The main goal was to provide an SSO solution like Keycloak and others while using a way lower footprint and being more efficient with resources. For instance, Rauthy can easily run a fully blown SSO provider on just a Raspberry Pi. It makes extensive use of caching for everything used in the authentication chain to be as fast as possible. Most things are even cached for several hours and special care has been taken into account in case of cache eviction and invalidation.

Rauthy comes with two database options:

- with embedded Hiqlite, which is the default setting

- or you can optionally use a Postgres as your database, if you already have an instance running anyway.

The resource usage depends a lot on your setup (Hiqlite, Postgres, HA deployment, amount of users, ...), but for a small set of users, it is usually below 100mb of memory even with the very aggressive, in-memory caching Rauthy uses, and in some cases even below 50mb.

Highly Available

Even though it makes extensive use of caching, you can run it in HA mode. Hiqlite creates its own embedded HA cache and persistence layer. Such a deployment is possible with both Hiqlite and Postgres.

Admin UI + User Account Dashboard

Rauthy does have an Admin UI which can be used to basically do almost any operation you might need to administrate the whole application and its users. There is also an account dashboard for each individual user, where users will get a basic overview over their account and can self-manage som values, password, passkeys, and so on.

Client Branding

You have a simple way to create a branding or stylized look for the Login page for each client. The

whole color theme can be changed and each client can have its own custom logo. Additionally, if you

modify the branding for the default rauthy client, it will not only change the look for the Login

page, but also for the Account and Admin page.

Events and Auditing

Rauthy comes with an Event- and Alerting-System. Events are generated in all kinds of scenarios. They can be sent via E-Mail, Matrix or Slack, depending on the severity and the configured level. You will see them in the Admin UI in real-time, or you can subscribe to the events stream and externally handle them depending on your own business logic.

Brute-Force and basic DoS protection

Rauthy has brute-force and basic DoS protection for the login endpoint. The timeout will be artificially delayed after enough invalid logins. It auto-blacklists IPs that exceeded too many invalid logins, with automatic expiry of the blacklisting. You can, if you like, manually blacklist certain IPs as well via the Admin UI.

IoT Ready

With the possibility to run on devices with very limited resources and having compatibility for the

OAuth Device Authorization Grant device_code flow, Rauthy would be a very good choice for IoT

projects. The IdP itself can easily run on a Raspberry Pi and all headless devices can be

authenticated via the device_code flow. The rauthy-client has everything built-in and ready, if

you want to use Rust on the IoT devices as well. It has not been checked in a no_std environment

yet, but the client implementation is pretty simple.

PAM Logins

OIDC / OAuth covers almost all web apps, and for those that don't have any support, Rauthy comes

with forward_auth support. To not need an additional LDAP / AD / something similar for your

backend and workstations, Rauthy comes with its own custom PAM module. It does not just use JWT

Tokens for logging in, but you can actually manage all your Linux hosts, groups and users in

different ways. You have the option to secure local logins to workstations via Yubikey (only USB

Passkeys supported, no QR-code / software keys), and all SSH logins can be done with ephemeral,

auto-expiring passwords, that you can generate via your Account dashboard, if an Admin has created a

PAM user for you. This means you basically have MFA-secured SSH logins without the need for any

modifications or additional software on your local SSH client, and you can use any SSH client from

any machine securely, even if it's not your own.

In addition to the PAM module, you get an NSS module and an NSS proxy that runs on each machine. You can dynamically log in to any machine an Admin has given you access to. Users and groups are not added to local files, but will be resolved via the network.

This module is published in a separate repo to avoid licensing issues, since it relies on some GPLv3 dependencies. You can take a look at it here: rauthy-pam-nss.

Scales to millions of users

Rauthy has no issue handling even millions of users. Everything keeps being fast and responsive,

apart from the search function for users in the Admin UI when you reach the 10+ million users, where

searching usually takes ~3 seconds (depending on your server of course).

The only limiting factor at that point will be your configuration and needs for password hashing

security. It really depends on how many resources you want to use for hashing (more resources ==

more secure) and how many concurrent logins at the exact same time you need to support.

Features List

- Fully working OIDC / OAuth 2.0 provider

- PAM logins via custom PAM + NSS modules

- Hiqlite or Postgres as database

- Fast and efficient with low footprint

- Secure default values

- Highly configurable

- High-Availability

- True passwordless accounts with E-Mail + Magic Link + Passkey

- Dedicated Admin UI

- Account dashboard UI for each user with self-service

- OpenID Connect Dynamic Client Registration

- OpenID Connect RP Initiated Logout

- OpenID Connect Backchannel Logout

- OAuth 2.0 Device Authorization Grant flow

- Upstream Authentication Providers (Login with ...)

- DPoP tokens for decentralized login flows

- Ephemeral, dynamic clients for decentralized login flows

- SCIM v2 for downstream clients

- All End-User facing sites support i18n server-side translation with the possibility to add more languages

- Simple per client branding for the login page

- Custom roles

- Custom groups

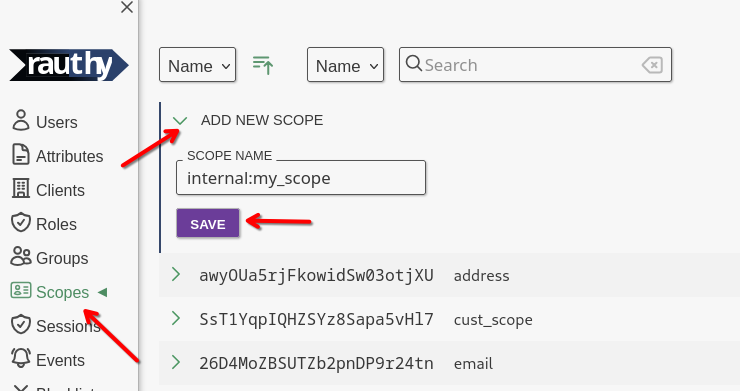

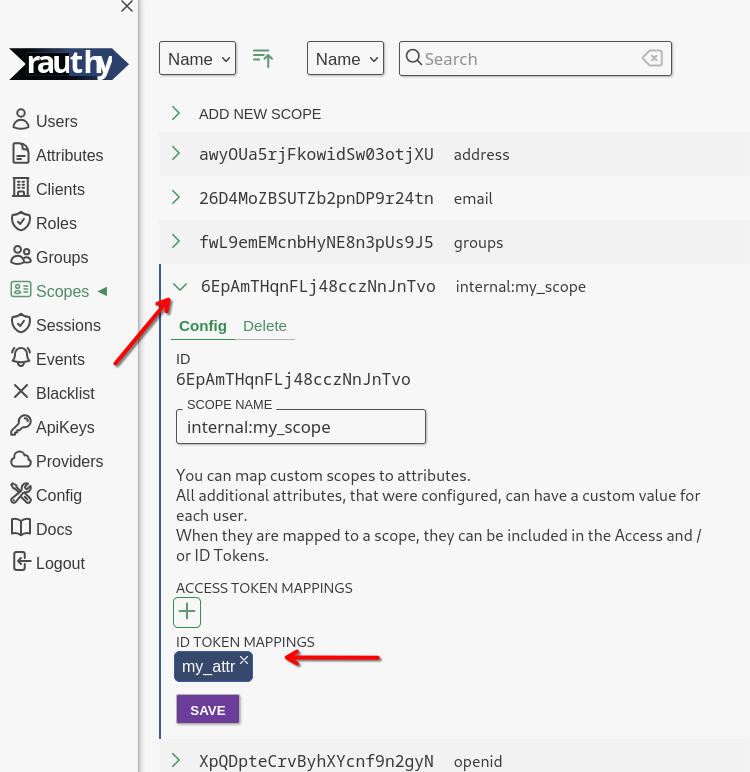

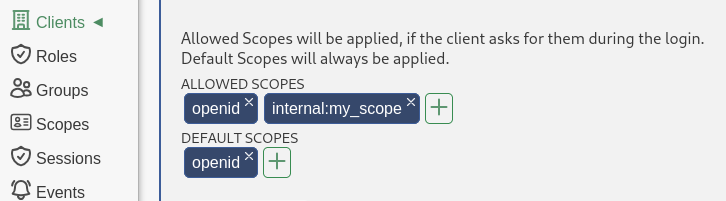

- Custom scopes

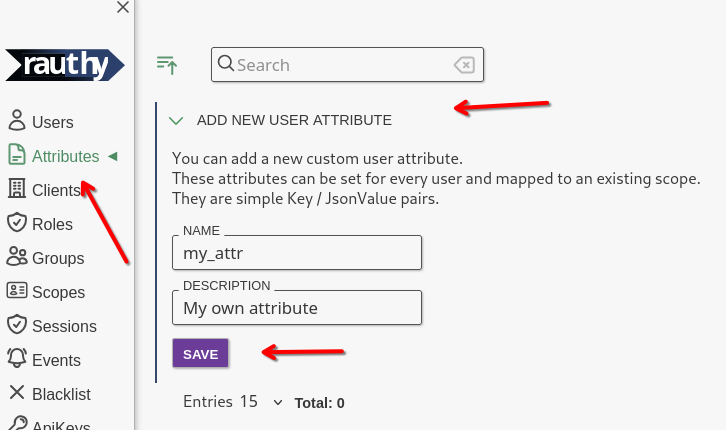

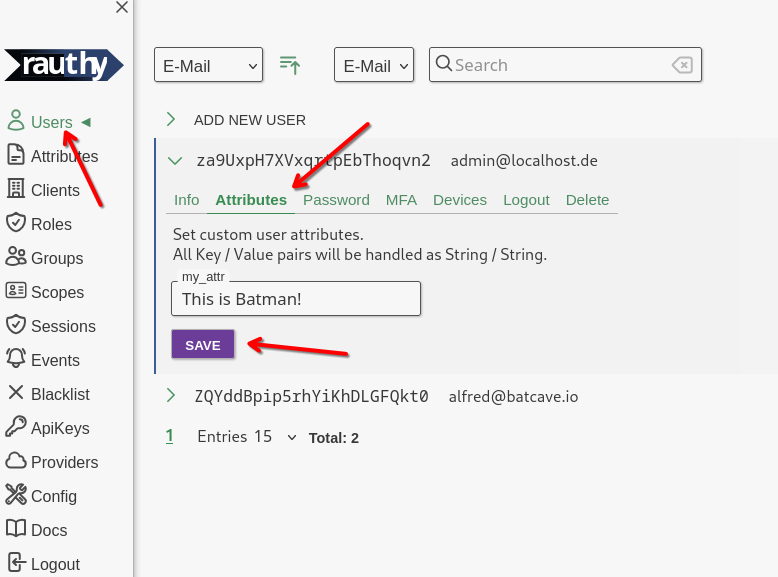

- Custom user attributes

- User attribute binding to custom scopes

- Optional user-editable custom attributes

- Configurable password policy

- Admin API Keys with fine-grained access rights

- Events and alerting system

- Optional event persistence

-

Dedicated

forward_authendpoint, in addition to the existing userinfo, with support for configurable trusted auth headers - Optional event notifications via: E-Mail, Matrix, Slack

- Optional Force MFA for the Admin UI

- Optional Force MFA for each individual client

- Additional encryption inside the database for the most critical entries

- Automatic database backups with configurable retention and auto-cleanup (Hiqlite only)

- auto-encrypted backups (Hiqlite only)

- Ability to push Hiqlite backups to S3 storage

- auto-restore Hiqlite backups from file or s3

- Username enumeration prevention

- Login / Password hashing rate limiting

- Session client peer IP binding

- IP blacklisting feature

- Auto-IP blacklisting for login endpoints

- Argon2ID with config helper UI utility

- Housekeeping schedulers and cron jobs

- JSON Web Key Set (JWKS) autorotation feature

- Account conversions between traditional password and Passkey only

- Optional open user registration

- Optional user registration domain restriction

- App version update checker

- SwaggerUI documentation

- Configurable E-Mail templates for NewPassword + ResetPassword events

-

Prometheus

/metricsendpoint on separate port - No-Setup migrations between different databases (Yes, even between Hiqlite and Postgres)

- Hot-Reload TLS certificates without restart

-

Can serve a basic

webiddocument - Experimental FedCM support

Getting Started

Choose A Database

Rauthy's default database is Hiqlite. Under the hood, it's using SQLite, but it adds a additional layer on top making it highly-available using the Raft Consensus Algorithm. Don't let the SQLite engine under the hood fool you, it will handle most probably anything you throw at it, as long as your disks are fast enough. Hiqlite can easily saturate a 1GBit/s network connection with just database (write) traffic. All reads are local, which means they are way faster than with Postgres in any scenario.

If you already have a Postgres up an running with everything set up anyway, you might want to choose it as your main DB, but I do not recommend deploying a Postgres instance just for Rauthy. This would be a waste of precious resources.

If you want to migrate between databases at a later point, you can do this at any time very easily.

Just take a look at the Reference Config and the variable MIGRATE_DB_FROM.

Container Images

Rauthy versions before 0.27.0 had different container images depending on the database you choose.

However, this is not the case anymore. There is only a single image which works with any

configuration. It works on x86_64 and arm64 architectures.

At the time of writing, you can run Rauthy either with Docker or

inside Kubernetes.

Both Getting Started guides do not cover all set up you might want to do for going into

production. Especially the Docker guide is more for testing. \

You should take a look at the Reference Config to see, what you might want

to configure additionally. A dedicated Going into production guide will be written in the future.

Docker

Testing / Local Evaluation

For getting a first look at Rauthy, you can start it with docker (or any other container runtime) on your localhost. The image contains a basic default config which is sufficient for local testing ( don't use it in production, it contains hardcoded secrets).

docker run -it --rm -e LOCAL_TEST=true -p 8443:8443 ghcr.io/sebadob/rauthy:0.34.3

To proceed, go to First Start, or do the production setup below to have persistence.

Testing / Evaluation with E-Mail

You can do anything you like with the default admin@localhost account. Rauthy does not

have any special accounts. It is an account like any other. The only reason it is a Rauthy admin, is

because it is assigned to the rauthy_admin role.

If you like to test creating new accounts or password reset flows though, you need to have at least

a minimal setup that is able to send E-Mails. The easiest way (works on localhost only) is the below

docker-compose.yaml:

services:

mailcrab:

image: marlonb/mailcrab:latest

ports:

- "1080:1080"

rauthy:

image: ghcr.io/sebadob/rauthy:0.34.3

environment:

- LOCAL_TEST=true

- SMTP_URL=mailcrab

- SMTP_PORT=1025

- SMTP_DANGER_INSECURE=true

volumes:

- data:/app/data

ports:

- "8443:8443"

depends_on:

- mailcrab

volumes:

data:

You can create a .env file with COMPOSE_PROJECT_NAME=myapp for custom container/volume name

prefix.

Save this into docker-compose.yaml and start with:

docker compose up -d

You then need the logs output from Rauthy to read the random password for the admin@localhost

account:

docker compose logs -f rauthy

Any outgoing E-Mail will be caught by mailcrab, which can be accessed

via http://localhost:1080 .

When you are done testing, shut down with

docker compose down

Production Setup

For going to production or to test more in-depth, you need to create a config that matches your environment.

The first thing you want to do is to add a volume mount for the database. The second thing is to provide a more appropriate config.

Rauthy expects at least the very basics in a config file. Most other values could be set via ENV

vars. It parses both, the config first and any env var that is set will overwrite a possibly

existing one from the config. You can add environment variables to the startup command with the -e

option.

The following commands will work on Linux and Mac OS (even though not tested on Mac OS). Since I am no Windows user myself, I cannot provide tested commands in this case.

1. We want to create a new directory for Rauthy's persistent data

mkdir -p rauthy/data

2. Add the new config file.

This documentation is in an early version and remote links are not available yet, they will be added at a later point. For now, create a new file and paste the reference config

vim rauthy/config.toml

3. Access rights for the Database files

The Rauthy container by default runs everything with user:group 10001:10001 for security

reasons.

To make this work with the default values, you have 2 options:

- Change the access rights:

sudo chmod 0600 rauthy/config.toml

sudo chmod 0700 rauthy/data

sudo chown -R 10001:10001 rauthy

- The other solution, if you do not have sudo rights, would be to change the owner of the whole directory.

chmod a+w rauthy/data

This will make the directory writeable for everyone, so Rauthy can create the database files inside

the container with 10001:10001 again.

The safest approach would be to change the owner and group for these files on the host system. This

needs sudo to edit the config, which may be a bit annoying, but at the same time it makes sure,

that you can only read the secrets inside it with sudo too.

You should avoid making Rauthy's data world-accessible at all cost. Hiqlite will take care of this automatically during sub-directory creation, but the config includes sensitive information.

4. Adopt the config to your liking.

Take a look at the reference config and adopt everything to your needs, but

to not break this example, be sure to not change cluster.data_dir.

For an in-depth guide on a production ready config, check the Production Config section.

A mandatory step will be to generate proper encryption keys. Take a look at Encryption.

5. Start the container with volume mounts

docker run -d \

-v $(pwd)/rauthy/config.toml:/app/config.toml \

-v $(pwd)/rauthy/data:/app/data \

-p 8443:8443 \

--name rauthy \

ghcr.io/sebadob/rauthy:0.34.3

-v $(pwd)/rauthy/config.toml:/app/config.tomlmounts the config in the correct place-v $(pwd)/rauthy/data:/app/datamounts the volume for Hiqlite-p 8443:8443needs to match your configuredserver.port_httporserver.port_httpsof course. If you use a reverse proxy inside a docker network, you don't need to expose any port, but you need to make sure to setserver.scheme = "http",server.proxy_mode = trueand the correct value forserver.trusted_proxies.

6. You can now proceed with the First Start steps.

Kubernetes

At the time of writing, there is no Helm Chart or Kustomize files available yet. The whole setup is pretty simple on purpose though, so it should not be a big deal to get it running inside Kubernetes.

Single Instance

Since Rauthy uses pretty aggressive caching for different reasons, you cannot just have a single deployment and scale up the replicas without a proper HA setup. How to deploy a HA version is described below.

The steps to deploy on Kubernetes are pretty simple.

- Create namespace

- Create and apply the config

- Create and apply the stateful set

Create Namespace

For the purpose of this documentation, we assume that Rauthy will be deployed in the rauthy

namespace.

If this is not the case for you, change the following commands accordingly.

kubectl create ns rauthy

Create and apply the config

This documentation will manage the Kubernetes files in a folder called rauthy.

mkdir rauthy && cd rauthy

Create the config file, paste the Reference Config and adjust it to your

needs. We are putting the complete config in a K8s secret. Rauthy's config contains quite a few

different secret values and it's just a lot simpler to maintain everything in a single secret, than

splitting it into a ConfigMap and overwrite each secret manually.

apiVersion: v1

kind: Secret

metadata:

name: rauthy-config

namespace: rauthy

type: Opaque

stringData:

config.toml: |

PASTE CONFIG HERE - WATCH THE INDENTATION'

Open the config with your favorite editor and paste the Reference Config in

place.

Make sure to watch the indentation.

I recommend to just always set cluster.node_id_from = "k8s" when deploying a StatefulSet. This

will parse the Raft NodeID automatically from the K8s Pod / Hostname and you don't have to worry

about the node_id. For instance, a Pod named rauthy-0 will be translated to node_id = 1

automatically.

There are some values that you need to generate on your own. These are:

cluster.secret_raft+cluster.secret_apiencryption.keys+encryption.key_active

The secrets for the cluster can be just some long random alphanumeric values. They are used for

authentication for the Hiqlite Raft + API layer. The encryption keys must be generated. More

detailed explanation is in the Encryption section. The tl;dr is:

echo "$(openssl rand -hex 4)/$(openssl rand -base64 32)"

Copy the output and add it to keys. The key_active will be the first part of the output until the

first /. For instance:

[encryption]

keys = ["XLCcaQ/f2xmq/nxVFgJN0CN311miyvVlBxXOQISyw1nPEPOqiI="]

key_active = "XLCcaQ"

You can generate safe values for both secret_raft and secret_api in many ways. You can just

provide a random alphanumeric value, which for instance:

cat /dev/urandom | tr -dc 'a-zA-Z0-9' | head -c48

or you can use the above openssl command again, even though Hiqlite does not need or utilize

base64:

openssl rand -base64 48

If you plan on using S3 for backups, paste the proper values into cluster.s3_* values.

It seems that in some environments, the above openssl command does not output proper values, which

will make Rauthy panic on startup, when it checks the given values. If you run into that situation,

you can generate them without openssl as well, with e.g:

echo "$(cat /dev/urandom | tr -dc 'a-zA-Z0-9' | head -c 6)/$(cat /dev/urandom | head -c 32 | base64)"

Create and apply the stateful set

touch sts.yaml

Paste the following content into the sts.yaml file:

apiVersion: v1

kind: Service

metadata:

name: rauthy

namespace: rauthy

spec:

selector:

app: rauthy

ports:

# chose whatever fits your needs here, you usually only need either http or https

- name: http

port: 8080

targetPort: 8080

- name: https

port: 8443

targetPort: 8443

---

# The headless service is used for the Raft Cluster setup, so Nodes

# can connect to each other without any load balancer in between.

apiVersion: v1

kind: Service

metadata:

name: rauthy-headless

namespace: rauthy

spec:

type: ClusterIP

clusterIP: None

# Make sure to only publish them on the headless service

# and NOT the one you are using via your reverse proxy!

publishNotReadyAddresses: true

sessionAffinity: None

selector:

app: rauthy

ports:

- name: hiqlite-raft

protocol: TCP

port: 8100

targetPort: 8100

- name: hiqlite-api

protocol: TCP

port: 8200

targetPort: 8200

---

# The PDB is only necessary for a HA deployment. You can take it out for a single instance.

apiVersion: policy/v1

kind: PodDisruptionBudget

metadata:

name: rauthy

namespace: rauthy

spec:

maxUnavailable: 1

selector:

matchLabels:

app: rauthy

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: rauthy

namespace: rauthy

labels:

app: rauthy

spec:

serviceName: rauthy-headless

# If you start a fresh cluster without a bootstrapped Admin password, it is

# highly suggested to start a single replica for the first setup + login.

# It will work with 3 replicas directly, but if you are not quick enough and

# your logs buffer size is small, you might miss the auto-generated password

# in Pod `rauthy-0` because of many logs.

replicas: 1

selector:

matchLabels:

app: rauthy

template:

metadata:

labels:

app: rauthy

spec:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app

operator: In

values:

- rauthy

topologyKey: "kubernetes.io/hostname"

securityContext:

fsGroup: 10001

containers:

- name: rauthy

image: ghcr.io/sebadob/rauthy:0.34.3

securityContext:

capabilities:

drop:

- ALL

# User ID 10001 is actually built into the container

# at the creation for better security

runAsUser: 10001

runAsGroup: 10001

runAsNonRoot: true

allowPrivilegeEscalation: false

ports:

# Hiqlite internal ports

- containerPort: 8100

- containerPort: 8200

# You may need to adjust this, if you decide to start

# in https only mode or use another port

- containerPort: 8080

- containerPort: 8443

volumeMounts:

- name: rauthy-data

mountPath: /app/data

readOnly: false

- name: rauthy-config

mountPath: /app/config.toml

readOnly: true

subPath: config.toml

readinessProbe:

httpGet:

scheme: HTTP

# Hiqlite API port

port: 8200

path: /ready

initialDelaySeconds: 5

# Do NOT increase this period, because otherwise K8s may not catch

# a shutting down pod fast enough and may keep routing requests to

# it while is will be unable to handle them properly because of

# the shutdown.

periodSeconds: 3

# We may get a single failure during leader switches

failureThreshold: 2

livenessProbe:

httpGet:

scheme: HTTP

# Rauthy API port

port: 8080

path: /auth/v1/health

initialDelaySeconds: 60

periodSeconds: 30

# We may get a single failure during leader switches

failureThreshold: 2

resources:

requests:

# Tune the memory requests value carefully. Make sure, that the

# pods request at least:

# `ARGON2_M_COST` / 1024 * `MAX_HASH_THREADS` Mi + idle memory

# The actual usage also heavily depends on the Memory Allocator

# tuning. You can find more information in the Tuning section

# in this book.

#

# A HA instance with Hiqlite enabled and without additional memory

# tuning will usually settle ~100mb idle memory being used.

# If you use an external Postgres, idle memory can go as low as

# ~30mb.

memory: 64Mi

# The CPU needs to be adjusted during runtime. This heavily

# depends on your use case.

cpu: 100m

limits:

# Be careful with the memory limit. You must make sure, that the

# (very costly) password hashing has enough memory available. If not,

# the application will crash. You do not really need a memory limit,

# since Rust is not a garbage collected language. Better take a close

# look at what the container actually needs during

# prime time and set the requested resources above properly.

#memory:

# A CPU limit may make sense in case of DDoS attacks or something

# like this, if you do not have external rate limiting or other

# mechanisms. Otherwise, `MAX_HASH_THREADS` is the main mechanism

# to limit resources.

#cpu: 1000m

volumes:

- name: rauthy-config

secret:

secretName: rauthy-config

volumeClaimTemplates:

- metadata:

name: rauthy-data

spec:

accessModes:

- "ReadWriteOnce"

resources:

requests:

storage: 128Mi

#storageClassName: provideIfNeeded

Ingress

This example assumes, that the deployment will run behind a Kubernetes ingress resource of your choice.

It uses Traefik with the IngressRoute CRD.

Nevertheless, the ingress is really simple, and it should be very easy to adopt anything else.

Create the ingress.yaml

touch ingress.yaml

Paste the following content into the ingress.yaml file and adjust to your needs

apiVersion: traefik.containo.us/v1alpha1

kind: IngressRoute

metadata:

name: rauthy-https

namespace: rauthy

spec:

entryPoints:

- websecure

routes:

- match: Host(`auth.example.com`)

kind: Rule

services:

- name: rauthy

port: 8080

Deploy

We are now ready to deploy:

kubectl apply -f .

And then to observe the deployment:

kubectl -n rauthy get pod -w

You can now proceed with the First Start steps.

Production Setup

Config

Going to production does not need too many additional steps.

TLS Certificates

The thing you need will be valid TLS certificates, of course. To get these, there are a lot of existing mechanisms. If you use an internal Certificate Authority (CA), you do have you own tools to work with this anyway. If, however, you want to use something like Let's Encrypt, I suggest to use the cert-manager, which is easy and straight forward to use.

An example, how to add a certificate for the Traefik IngressRoute from above:

apiVersion: traefik.containo.us/v1alpha1

kind: IngressRoute

metadata:

name: rauthy-https

namespace: rauthy

spec:

entryPoints:

- websecure

tls:

# Paste the name of the TLS secret here

secretName: secret-name-of-your-tls-certificate

routes:

- match: Host(`auth.example.com`)

kind: Rule

services:

- name: rauthy

port: 8080

You may want to add an HTTPS redirect as well:

apiVersion: traefik.containo.us/v1alpha1

kind: Middleware

metadata:

name: https-only

namespace: rauthy

spec:

redirectScheme:

scheme: https

permanent: true

---

apiVersion: traefik.containo.us/v1alpha1

kind: IngressRoute

metadata:

name: rauthy-http

namespace: rauthy

spec:

entryPoints:

- web

routes:

- match: Host(`auth.example.com`)

kind: Rule

middlewares:

- name: https-only

services:

- name: rauthy

port: 8080

Hiqlite Internal TLS

You can of course also provide TLS certificates for the Hiqlite internal communication. Two independent networks are created: one for the Raft-Internal network traffic like heartbeats and data replication, and a second one for the "external" Hiqlite API. This is used by other Hiqlite cluster members for management purposes and to execute things like consistent queries on the leader node.

You can provide TLS certificates for both of them independently via the following config variables:

[cluster]

# If given, these keys / certificates will be used to establish

# TLS connections between nodes.

#

# values are optional, overwritten by: HQL_TLS_{RAFT|API}_{KEY|CERT}

# overwritten by: HQL_TLS_RAFT_KEY

tls_raft_key = "tls/tls.key"

# overwritten by: HQL_TLS_RAFT_CERT

tls_raft_cert = "tls/tls.crt"

tls_raft_danger_tls_no_verify = true

# overwritten by: HQL_TLS_API_KEY

tls_api_key = "tls/tls.key"

# overwritten by: HQL_TLS_RAFT_KEY

tls_api_cert = "tls/tls.crt"

tls_api_danger_tls_no_verify = true

Additional steps

There are a few more things to do when going into production, but these are the same for Kubernetes and Docker and will be explained in later chapters.

You can now proceed with the First Start steps.

First Start

Initial admin password and login

With the very first start of rauthy, or better with an empty database, when rauthy is starting, it does not only create all the necessary schemas and initial data, but also some sensitive information will be generated safely. This includes a set of Json Web Keys (JWKS) for the token signing and some secrets.

The most important of these newly generated secrets is the default admin user's password.

When this is securely generated with the very first start, it will be logged into the console. This

will only happen once and never again.

Logs with docker

docker logs -f rauthy

Logs with Kubernetes

kubectl -n rauthy logs -f rauthy-0

If you do a Kubernetes HA deployment directly, only the Pod rauthy-0 will log the initial password.

If you missed this log entry, you will not be able to log in.

If this is the case, you can delete the database / volume and just restart rauthy.

The log message contains a link to the accounts page, where you then should log in to immediately

set a new password. Follow the link, use as the default admin admin@localhost and as password the

copied value from the log.

- When logged into the account, click

EDITandCHANGE PASSWORDto set a new password - Log out of the account and try to log in to the admin ui with the new password

Custom rauthy admin user

It is a good idea, to either keep the admin@localhost and rename it (to never have a default

admin, which would be an attack vector) as a fallback user with just a very long password, or

disable it, after a custom admin has been added.

When logged in to the admin UI, you can add a new user. When the SMTP settings are correctly

configured in the config, which we can test right now, you will receive an E-Mail with the very

first password reset.

If you do not receive an E-Mail after the first user registration, chances are you may have a problem with the SMTP setup.

To debug this, you can set LOG_LEVEL=debug in the config and then watch the logs after a restart.

rauthy_admin user role

The role, which allows a user to access the admin UI, is the rauthy_admin.

If the user has this role assigned, he will be seen as an admin.

Under the hood, rauthy itself uses the OIDC roles and groups in the same way, as all clients would

do. This means you should not neither delete the rauthy default client, nor the rauthy_admin

role. There are mechanisms to prevent this from happening by accident via UI, but you could possibly

do this via a direct API call. \

There are some anti-lockout mechanisms in place in the backend, which will be executed with every

start, but being careful at this point is a good idea anyway.

Shutdown

The shutdown may confuse some people. For single instance deployments, it will only take a few seconds. For a HA cluster node though, it will usually end up somewhere in the range of ~15 seconds. Depending on the current cluster state, healthiness of remote nodes, a possibly ongoing leader election, and so on, it may even take up to 25 - 30 seconds to do a graceful shutdown. The reason behind this is that the shutdown logic tries to do it as smooth as possible, to e.g. never have requests in Kubernetes going to an already shutting down node.

This behavior is absolutely normal and expected! It does not hang or get stuck. Even though it can recover from it, you should never force-kill the process, as it will trigger an additional integrity check of WAL files and a full rebuild of the DB (when using Hiqlite) during the next startup, so that a consistent state can always be guaranteed.

Some container runtimes do force-kill automatically after 10 seconds. This is for instance the case

for podman, or for docker compose.

Make sure to adjust your shutdown timeout to at least 30 seconds.

Production Config

If you want to go into production, the configuration from the Getting Started section is most probably not enough.

The best thing you could do is just taking a look at the Reference Config and reading through all the possible options.

However, this section will give you a head start with the minimum you should set up. Depending on if you started with Docker or Kubernetes so far, some values might have been already set.

Minimal Production Config

This section gives you a template for probably the most minimal config you could do when going into production.

You can either copy & paste this (with adjustments of course) and expand it when necessary, or take a look at the complete Reference Config and check each existing value.

[bootstrap]

# If set, the email of the default admin will be changed during

# the initialization of an empty production database.

#

# default: 'admin@localhost'

# overwritten by: BOOTSTRAP_ADMIN_EMAIL

admin_email = "admin@localhost"

[cluster]

# Can be set to 'k8s' to try to split off the node id from the hostname

# when Hiqlite is running as a StatefulSet inside Kubernetes.

#

# default: unset

# overwritten by: HQL_NODE_ID_FROM

#node_id_from = "k8s"

# The node id must exist in the nodes and there must always be

# at least a node with ID 1

# Will be ignored if `node_id_from = k8s`

#

# At least `node_id_from` or `node_id` are required.

#

# default: 0 (invalid)

# overwritten by: HQL_NODE_ID

node_id = 1

# All cluster member nodes. For a single instance deployment,

# `"1 localhost:8100 localhost:8200"` will work just fine.

# Each array value must have the following format:

# `id addr_raft addr_api`

#

# default: ["1 localhost:8100 localhost:8200"]

# overwritten by: HQL_NODES

nodes = [

"1 localhost:8100 localhost:8200",

# "2 localhost:8101 localhost:8201",

# "3 localhost:8102 localhost:8202",

]

# Secrets for Raft internal authentication as well as for the API.

# These must be at least 16 characters long and you should provide

# different ones for both variables.

#

# default: not set - required

# overwritten by: HQL_SECRET_RAFT

secret_raft = "SuperSecureSecret1337"

# default: not set - required

# overwritten by: HQL_SECRET_API

secret_api = "SuperSecureSecret1337"

[email]

# Rauthy will force TLS and try a downgrade to STARTTLS, if

# TLS fails. It will never allow an unencrypted connection.

# You might want to set `SMTP_DANGER_INSECURE=true` if you

# need this for local dev.

#

# overwritten by: SMTP_URL

smtp_url = "localhost"

# optional, default will be used depending on TLS / STARTTLS

# overwritten by: SMTP_PORT

#smtp_port =

# overwritten by: SMTP_USERNAME

smtp_username = "username"

# overwritten by: SMTP_PASSWORD

smtp_password = "password"

# Format: "Rauthy <rauthy@localhost>"

# default: "Rauthy <rauthy@localhost>"

# overwritten by: SMTP_FROM

smtp_from = "Rauthy <rauthy@localhost>"

[encryption]

# You need to define at least one valid encryption key.

# These keys are used in various places, like for instance

# encrypting confidential client secrets in the database, or

# encryption cookies, and so on.

#

# The first part until the first `/` is the key ID.

# The ID must match '^[a-zA-Z0-9:_-]{2,20}$'

#

# The key itself begins after the first `/` has been found.

# The key must be exactly 32 bytes long, encoded as base64.

#

# You can find a more detailed explanation on how to generate

# keys in the documentation:

# 1. https://sebadob.github.io/rauthy/getting_started/k8s.html#create-and-apply-secrets

# 2. https://sebadob.github.io/rauthy/config/encryption.html

#

# You can provide multiple keys to make things like key

# rotation work. Be careful with removing old keys. Make sure

# that all secrets have been migrated beforehand.

# You can find a utility in the Admin UI to do this for you.

#

# overwritten by: ENC_KEYS - single String, \n separated values

keys = ["q6u26/M0NFQzhSSldCY01rckJNa1JYZ3g2NUFtSnNOVGdoU0E="]

# This identifies the key ID from the `ENC_KEYS` list, that

# should actively be used for new encryptions.

#

# overwritten by: ENC_KEY_ACTIVE

key_active = "q6u26"

[events]

# The E-Mail address event notifications should be sent to.

#

# overwritten by: EVENT_EMAIL

email = "admin@localhost"

[server]

# The scheme to use locally, valid values:

# http | https | http_https | unix_http | unix_https

# For more details about the UNIX domain socket, check out its

# documentation page.

#

# default: http_https

# overwritten by: LISTEN_SCHEME

scheme = "http"

# The Public URL of the whole deployment

# The LISTEN_SCHEME + PUB_URL must match the HTTP ORIGIN HEADER

# later on, which is especially important when running Rauthy

# behind a reverse proxy. In case of a non-standard port (80/443),

# you need to add the port to the PUB_URL

#

# default: not set - mandatory

# overwritten by: PUB_URL

pub_url = "localhost:8080"

# When rauthy is running behind a reverse proxy, set to true

#

# default: false

# overwritten by: PROXY_MODE

proxy_mode = false

# A list of trusted proxy CIDRs. When `proxy_mode = true`

# or `peer_ip_header_name` is set, these are mandatory to

# be able to extract the real client IP properly and safely

# to prevent IP header spoofing. All requests witha

# different source will be blocked.

#

# default: []

# overwritten by: TRUSTED_PROXIES - single String, \n separated values

#trusted_proxies = ['192.168.14.0/24']

[webauthn]

# The 'Relaying Party (RP) ID' - effective domain name.

#

# CAUTION: When this changes, already registered devices will

# stop working and users cannot log in anymore!

#

# default: 'localhost'

# overwritten by: RP_ID

rp_id = "localhost"

# Url containing the effective domain name.

#

# DEV: If you want to test Webauthn via the Svelte DEV UI,

# change the port number to :5173.

#

# !!! CAUTION: Must ALWAYS include the port number !!!

#

# default: 'http://localhost:8080'

# overwritten by: RP_ORIGIN

rp_origin = "http://localhost:5173"

TL;DR for generating a encryption.keys:

echo "$(openssl rand -hex 4)/$(openssl rand -base64 32)"Password Hashing Setup

Even though the options and tools in the Admin UI should be fully documented, I wanted to mention argon2id tuning here.

Rauthy uses the argon2id hashing algorithm for passwords. This is the most expensive and

compute-heavy operation done by the application, and the variables need to be tuned for every

deployment to provide the best compromise of security, resource usage and user experience.

The default values are a good start at the lower end of the "being safe scale" for a production

deployment. However, you should at least take a look at them and verify that they work for you.

The Admin UI provides a utility which helps you find the best values for your deployment quickly. What and how to do is described in the Admin UI itself. I just want to guide you to this utility, especially since it is an important step security wise.

When you are logged in to the Admin UI, please navigate to Config -> Argon2 Parameters to find

your values. After they have been found, apply them to the Rauthy config and restart the

deployment.

Keep in mind that if you run the application in a way, where memory is limited, for instance inside

Kubernetes with resource limits set too low, that it will crash, if either hashing.argon2_m_cost

is set too high or the memory limit too low.

There is one additional, really important config variable need to be taken into account for the

tuning.

Since this operation is really resource intense, you can limit the number of threads which can run

in parallel doing hashing operations. This is really important when we think about constrained

memory again.

hashing.max_hash_threads limits the maximum number of parallel password hashes at the exact same

time to never exceed system memory while still allowing a good amount of memory. The default value

is 2.

The rule is simple: Allow as many resources as possible for hashing to have the maximum amount of security, while restricting it as much as necessary.

For smaller deployments, set hashing.max_hash_threads, which will technically allows only one user

login at the exact same time. This value makes an external rate limiting for the login obsolete (

while you may add some for the others).

Passkeys / WebAuthn

Passkey Only Accounts

Rauthy provides the ability to create Passkey only accounts.

These accounts do not have any password at all. The user can login via E-Mail + providing the Passkey. Only keys

with additional user verification (UV) will be accepted for these accounts. This makes sure, that they are 2FA / MFA

secured (depending on the device) all the time.

You can choose the account type either during the initial password reset link you get via E-Mail, or you can convert a traditional password account to a passkey only account in your account view, if you have at least one Passkey with additional UV registered.

Passkey only accounts provide a few benefits:

- no need to remember or store any passwords

- way easier and faster logging in

- always 2FA / MFA

- strongest type of authentication

- no need to satisfy password policies

- no need to reset your password after it has been expired

Passkey only accounts cannot use the traditional password reset E-Mails.

This is a drawback and a benefit at the same time:

No way to take over an account if the E-Mail account has been compromised, but at the same time the user

relies on an Admin to reset the MFA devices, if no backup exists or all are lost.

Unfortunately, passkeys have one big drawback when you use the discoverable credentials / resident keys feature. This is really cool and the best possible UX at first glance, because the user does not need to provide even a username / email manually, but has one very big drawback.

The amount of resident keys a passkey can store is always limited.

There are devices, that can get locked up completely if you try to register resident keys beyond the capacity of the device itself. For this reason, Rauthy strictly discourages the use of resident keys and will always request the user to provide the email, so you can never end up in such a situation with Rauthy. Non-discoverable credentials are not limited per device.

Rauthy remembers the last email that has been used for a login on each machine. This provides the same best possible UX in the end that you would get with discoverable credentials but without the drawbacks of the limited amount.

Android has finally added support for biometric UV in September 2023.

This has made is possible to implement this feature into Rauthy without sacrificing security.

However, at the time of writing (2024/06/05), PIN / biometric UV via NFC keys like Yubikeys does still not work.

Sadly, Google just decided to not implement this feature for other keys than their own implementation of it.

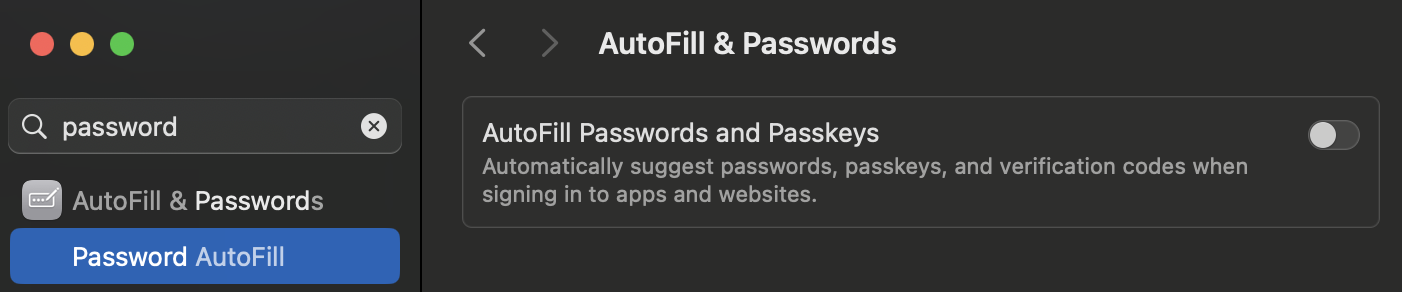

On macOS, when your browser tries to create a passkey, it will default to using the built-in Passwords app. If you wish to use a different password manager such as 1Password, Vaultwarden, etc., you'll need to disable the setting "Autofill Passwords and Passkeys".

If you want to register an Android device for a Passkey only account, but you are using for instance Yubikeys with PIN UV, you can do the following trick to get it done (works only with the latest Play store version):

- Create a password for your account, if it does not exist yet

- Remove all registered passkeys

- Log in to your account view on your Android device and another device that works with your Yubikey

- With both devices logged in at the same time:

- Register a new passkey with Android and choose "this device", which will create a Passkey flow with your fingerprint

- Register the Yubikey on the other device

- You should now have 2 Passkeys: Android + Yubikey

- Navigate to the Password page inside your account on any device and convert it to Passkey only

- You should now be able to log in on your Android device with Passkey only and with your Yubikey

Config

You should use Passkeys / Webauthn in production for 2FA / MFA. To make sure it works, you need to check your the config.

Adjust the following variables in your config, to make sure it works correctly.

RP_ID

This is the Relaying Party (RP) ID, which should be your effective domain name.

Let's say our application is available at auth.example.com, then this should be:

[webauthn]

rp_id = "auth.example.com"

When the RP_ID changes, already registered devices will stop working and users cannot log in anymore!

Be very careful, if you want / need to do this in production.

RP_ORIGIN

The second important variable is the webauthn.rp_origin. This needs to be set to the scheme + URL containing the

effective domain name + port.

The rp_origin must always include the port number, even if it is just the default 443 for HTTPS.

In this example, assuming Rauthy will be available at port 443, correct would be:

[webauthn]

rp_origin = https://auth.example.com:443

RP_NAME

This variable can be set to anything "pretty".

This may be shown to the user in a way like "rp_name requests your security key ...". If this is shown depends on the

OS and the browser the client uses. Firefox, for instance, does not show this at the time of writing.

You can change the rp_name later on without affecting the validation of already registered keys.

WEBAUTHN_RENEW_EXP

For all non Passkey only accounts, Rauthy will always prompt a user at least once for the password on a new machine, even with active passkeys. The keys are used either as a strong second factor, when they do not work with a PIN, or bump up the whole login to real MFA, if the OS / Browser / Key does support this.

When a user has logged in successfully on a new device and active 2FA / MFA, Rauthy will set an encrypted cookie as a

"remember me". The lifetime of this cookie can be configured with renew_exp. The default of this value is 2160

hours.

As long as this cookie is present and can be decrypted by the backend, the user can log in from this very device with the registered Passkey key only, which makes a very good user experience for the whole login flow. The E-Mail will already be filled automatically and only a single click on the login button is necessary.

Encryption

Rauthy does additional encryption for values in lots of places, like for instance client_secrets in the database

or session cookies. In the Getting Started for Kubernetes, we have set up the encryption.keys and

encryption.key_active.

The encryption.keys defines the static keys used for additional data encryption in a few places. This values may

contain multiple keys, if you want to rotate them at some point without breaking the decryption of all already existing

secrets.

encryption.key_active defines the key inside encryption.keys which will be used as the default. This means that all

new / current encryption's performed by the backend will use the key with the given ID.

Setup

If you followed the Getting Started for Kubernetes, you already completed this step. If not, this is how you can generate an encryption key.

echo "$(openssl rand -hex 4)/$(openssl rand -base64 32)"

The first part until the first / is the key ID. This has to be between 2 and 20 characters and should not contain any

special ones. The second part after the first / is the key itself. This value must be exactly 32 bytes long and

then be base64 encoded. If it is not, Rauthy will yell at startup and panic early.

If you have generated a key, lets say the output was

❯ echo "$(openssl rand -hex 4)/$(openssl rand -base64 32)"

90eb6d69/U9wZG4GS/94pVh6iTH1ijf+kj+tXJHKkQNsp5eImMQI=

Your config value should look like this:

[encryption]

keys = ["90eb6d69/U9wZG4GS/94pVh6iTH1ijf+kj+tXJHKkQNsp5eImMQI="]

key_active = "90eb6d69"

You can add more keys if you like, separated by new lines, which is needed for the key rotation described below.

The encryption.key_active will be the key being used for all new encryption's.

It seems that in some environments, the above openssl command does not output proper values, which will make Rauthy

panic on startup, when it checks the given values. If you run into that situation, you can generate them without

openssl as well, with e.g:

echo"$(cat /dev/urandom | tr -dc 'a-zA-Z0-9' | head -c 6)/$(cat /dev/urandom | head -c 32 | base64)"Key Rotation

A good practice is to rotate encryption keys from time to time. Rauthy does auto-rotate the keys for signing tokens, but the admin is responsible for the encryption keys, since they have a greater impact.

1. Add a new key to the encryption.keys in you secrets

You must not remove a current key, before the migration has been done via the UI.

If the old key is gone, the migration will fail.

2. Generate a new key + id

echo "$(openssl rand -hex 4)/$(openssl rand -base64 32)"

The part before the first / is the key_active and the part afterwards is the key base64 encoded.

You might set the new encryption.key_active to the newly generated key ID.

Keep in mind, you need to ADD this to your existing keys and not just replace them! If you just replace them, almost all things will break and fall apart.

The final format of the encryption.keys should look something like this, for instance:

[encryption]

key = [

"Wx1zrbLF/5vTaB7LdUSg1aTecmqHJOu2+RnU6zgTwNkDQU52Y3JM=",

"6uf5QebA/9DsKMoq8A+Gn2WQrTcSpz5sg751yYs3IJlkw3dn0rII=",

]

In this example, if the first key should be the new active default, set your key_active to

[encryption]

key_active = "Wx1zrbLF"

3. Set the key_active to the ID of your newly generated key

This will make sure, that all new encryptions will use the new key. If you do not care about removing the old keys,

because you maybe just want to rotate because its good practice, the secrets will migrate "by themselves" over time.

If Rauthy finds any secrets during its normal operation, that have been encrypted with an older key than the current

key_active, it will re-encrypt these secrets and update the values.

This means, you may just stop at this point, if this is good enough for you.

4. Migrate Keys

If you however want to trigger a re-encryption of all existing secrets on purpose, there is a small tool in the Admin UI which helps you with this.

Log in to the Admin UI and navigate to Config -> Encryption Keys.

You will see the currently recognized keys and the currently active ID.

You can then make sure, that the ID you want to migrate secrets to is selected and execute the migrations. Please keep in mind, that if you have a lot of data, it might take a few seconds to perform this operation. This will migrate all encrypted data for existing OIDC clients, all JWKs, and so on, with the new key.

5. Remove old keys

After a successful migration via the UI tool, you could remove old keys from the keys value, but it is not

recommended as long as you did not have a known data breach. Just keep them around for some time because of

encrypted cookies with older keys.

All cookies are encrypted with the key_active. This means, if you remove something from the keys which has

been used to encrypt cookies, the user will be prompted to log in again, even if cookies have not expired yet.

Rauthy uses ChaCha20Poly1305 for any encryption. AES GCM is not used on purpose, because it has some attack vectors if its used without hardware acceleration.

Usually, devices these days all come with dedicated AES acceleration, even embedded ones. However, with VM's this is often a different story and its not guaranteed, that you will have AES acceleration when you spin up a VM in some cloud. Rauthy tries to be as secure as possible by default and therefore ChaCha20Poly1305 has a slight advantage over AES.

Logging and Auditing

Rauthy logs most things into the console, depending on the configuration of different log levels. In

addition, more

important events can be sent to Matrix, Slack or via E-Mail. All of this is highly configurable and

you should be able

to achieve whatever you need. All events are logged into the console as well with their configured

level. This means,

if Rauthy fires an event of type NewUser with the level info and you have configured a log level

of at least the

same, you will see the event in the console as well. So you could only use log aggregation and use

existing tools

without configuring other targets and still catch everything.

Logging

You can configure not only different levels for logging, but also different targets / situations.

LOG_LEVEL

The LOG_LEVEL variable configures the default logging in most situations. This defines the logging

for instance

for logging information from different function runs or things that have been triggered.

[logging]

# This is the log level for stdout logs

# Accepts: error, warn, info, debug, trace

#

# default: 'info'

# overwritten by: LOG_LEVEL

level = 'info'

LOG_LEVEL_DATABASE

The Hiqlite database logging is at the time of writing pretty verbose on purpose. The whole persistence layer with the Raft cluster setup has been written from the ground up. The amount of logging will be reduced in later versions, when the whole layer has been proven to be really solid, but for now you get more information just in case you need to debug something.

You can reduce the default logging and for instance set it to warn or error only.

[logging]

# The log level for the `Hiqlite` persistence layer.

# At the time of writing, only the cache will use `hiqlite`

#

# default: info

# overwritten by: LOG_LEVEL_DATABASE

level_database = 'info'

LOG_LEVEL_ACCESS

For changing the logging behavior for access logs to the API endpoints, you will need to set the

LOG_LEVEL_ACCESS.

If you have access logging configured at your firewall or reverse proxy, you can disable the

LOG_LEVEL_ACCESS fully

to reduce duplicated log outputs.

[logging]

# This is a special config which allows the configuration of

# customized access logs. These logs will be logged with each

# request in addition to the normal LOG_LEVEL logs.

# The following values are valid:

# - `debug`

# CAUTION: The Debug setting logs every information available

# to the middleware which includes SENSITIVE HEADERS

# DO NOT use the Debug level in a working production environment!

# - `verbose`

# Verbose logging without headers - generates huge outputs

# - `basic`

# Logs access to all endpoints apart from the Frontend ones which

# all js, css, ...

# - `modifying`

# Logs only requests to modifying endpoints and skips all GET

# - `off`

#

# default: 'modifying'

# overwritten by: LOG_LEVEL_ACCESS

level_access = 'modifying'

LOG_FMT

Rauthy can output logs as JSON data with the following variable:

[logging]

# You can change the log output format to JSON, if you set:

# `log_fmt=json`.

# Keep in mind, that some logs will include escaped values,

# for instance when `Text` already logs a JSON in debug level.

# Some other logs like an Event for instance will be formatted

# as Text anyway. If you need to auto-parse events, please consider

# using an API token and listen ot them actively.

#

# default: text

# overwritten by: LOG_FMT

log_fmt = 'json'

Events

Events are used for auditing and never miss anything. If something important happens, you usually need to inspect logs to catch it, but why should you, if you did not notice any problems? This is where Rauthy Events are helping you out. You need to set up basic configuration for Event targets and then you could customize the different levels.

Basic Setup

Rauthy has the following Event targets built-in:

- Matrix

- Slack

You can see the full set of config option in the [events] in the Reference Config.

To be able to receive Events via E-Mail, you need to have set up an SMTP server and have a working

connection. With

a working SMTP, you only need to set events.email, that's it.

[events]

# The E-Mail address event notifications should be sent to.

#

# overwritten by: EVENT_EMAIL

email = 'admin@localhost'

Matrix

Matrix is often deployed with home servers that may not even have real TLS certificates or if just running behind closed doors, may use self-signed certificates. To make it work in all of these situations, you can configure quite a lot for the connection to Matrix. In the end, you will only need to have some credentials and a room ID, so Rauthy knows where it should post the events.

[events]

# Matrix variables for event notifications.

# `matrix_user_id` and `matrix_room_id` are mandatory.

# Depending on your Matrix setup, additionally one of

# `matrix_access_token` or `matrix_user_password` is needed.

#

# If you log in to Matrix with User + Password, you may use

# `matrix_user_password`. If you log in via OIDC SSO (or just

# want to use a session token you can revoke), you should

# provide `matrix_access_token`.

# If both are given, the `matrix_access_token` will be preferred.

#

# If left empty, no messages will not be sent to Matrix.

# Format: `@<user_id>:<server address>`

#

# overwritten by: EVENT_MATRIX_USER_ID

matrix_user_id = ''

# Format: `!<random string>:<server address>`

# overwritten by: EVENT_MATRIX_ROOM_ID

matrix_room_id = ''

# overwritten by: EVENT_MATRIX_ACCESS_TOKEN

matrix_access_token = ''

# overwritten by: EVENT_MATRIX_USER_PASSWORD

matrix_user_password = ''

# URL of your Matrix server.

# default: https://matrix.org

# overwritten by: EVENT_MATRIX_SERVER_URL

matrix_server_url = 'https://matrix.org'

# Optional path to a PEM Root CA certificate file for the

# Matrix client.

#

# overwritten by: EVENT_MATRIX_ROOT_CA_PATH

matrix_root_ca_path = 'tls/root.cert.pem'

# May be set to disable the TLS validation for the Matrix

# client.

#

# default: false

# overwritten by: EVENT_MATRIX_DANGER_DISABLE_TLS_VALIDATION

matrix_danger_disable_tls_validation = false

# The default behavior is, that Rauthy will panic at startup

# if it cannot connect to a configured Matrix server. The

# reason is that event notifications cannot be dropped silently.

#

# However, if you use a self-hosted Matrix server which uses

# Rauthy as its OIDC provider and both instances went offline,

# you will have a chicken-and-egg problem:

# - Rauthy cannot connect to Matrix and will panic

# - Your Matrix server cannot connect to Rauthy and will panic

# To solve this issue, you can temporarily set this value to

# 'true' and revert back, after the system is online again.

#

# default: false

# overwritten by: EVENT_MATRIX_ERROR_NO_PANIC

matrix_error_no_panic = false

I suggest that you create a separate room for these events. If you experience issues with Matrix encryption, you should maybe disbale the encryption for the Events room. I came across some weird errors from Matrix in the past when I was using a session token which has been created on another machine.

Slack

To receive messages via Slack, you need to create a legacy webhook inside your Slack account. This is then the only config variable you need to set:

[events]

# The Webhook for Slack Notifications.

# If left empty, no messages will be sent to Slack.

#

# overwritten by: EVENT_SLACK_WEBHOOK

slack_webhook = ""

Custom Target

If you need your events to be sent somewhere custom, you can always create an API key with read

access for Events.

Then write a small app that simply listens to the events stream, which can process or just forwards

the events to where

ever you need them.

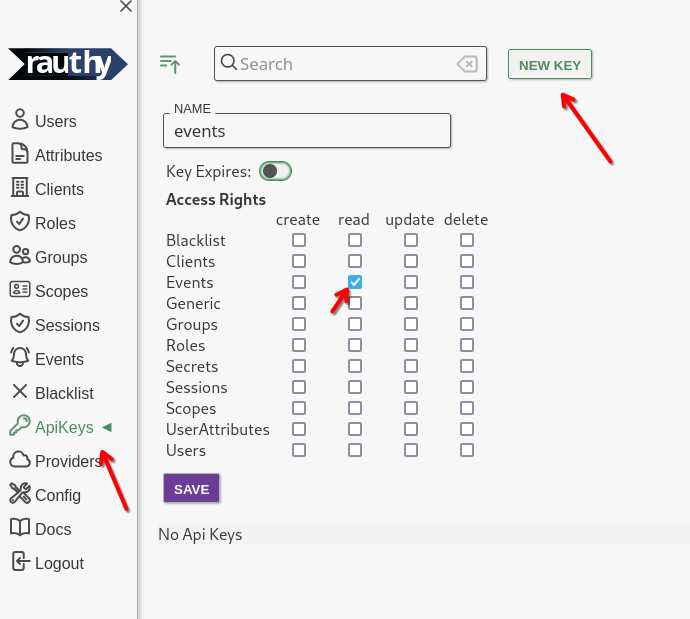

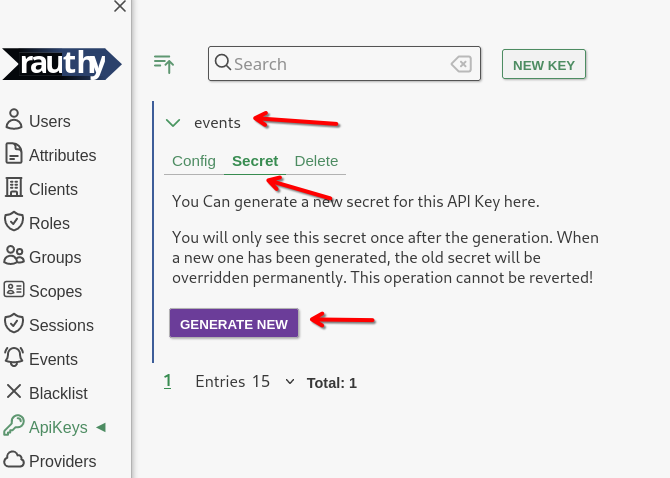

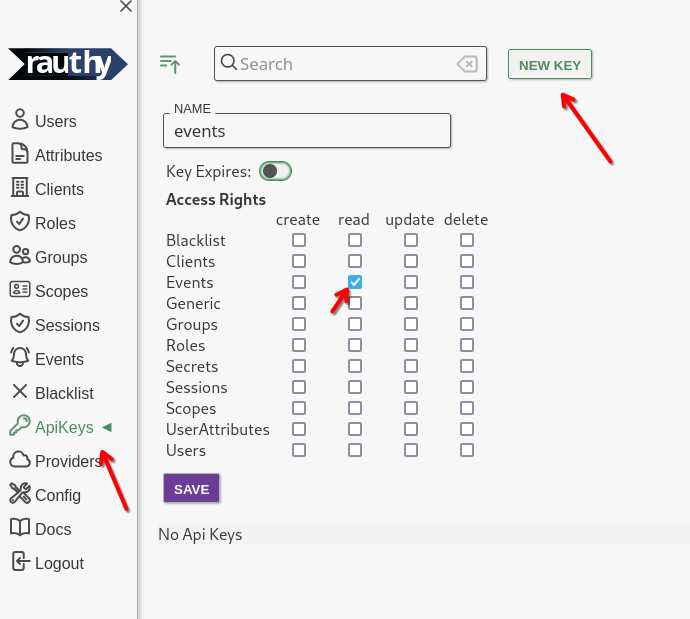

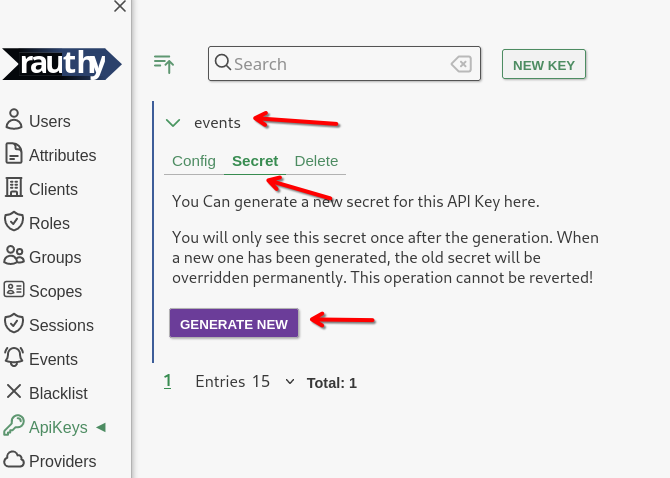

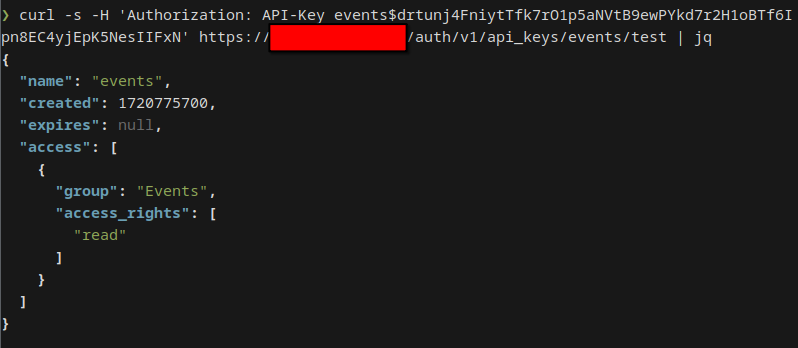

- Log in to the Admin UI and create an API key with

readaccess for events.

- Save the key, expand the new entry, navigate to

SecretandGenerate New

- Copy the new Secret from the hidden input that will appear.

You will also see 2 prepared

curlstatements to test your new API key. From the test, you should get an output similar to this:

{

"name": "events",

"created": 1720428190,

"expires": null,

"access": [

{

"group": "Events",

"access_rights": [

"read"

]

}

]

}

- Listen to the events stream.

You need to add the API key in the

Authorizationheader with the prefixAPI-Key, for instance like

Authorization: API-Key events$SUcpBxcPmfwH9z1Kb4ExOUYDSXpxOj9mFLadjuQ1049XaWzdWB328aa97k2nj21E

You can either periodically fetch via the /auth/v1/events endpoint (see Swagger documentation in

the Admin UI), or

by listening to the /auth/v1/events/stream, which will be a Server Sent Events stream.

The events will be sent in JSON format and have the following content:

#![allow(unused)] fn main() { struct Event { pub id: String, pub timestamp: i64, pub level: EventLevel, pub typ: EventType, pub ip: Option<String>, pub data: Option<i64>, pub text: Option<String>, } // the `EventLevel`s will be converted to lower case enum EventLevel { Info, Notice, Warning, Critical, } enum EventType { InvalidLogins, IpBlacklisted, IpBlacklistRemoved, JwksRotated, NewUserRegistered, NewRauthyAdmin, NewRauthyVersion, PossibleBruteForce, RauthyStarted, RauthyHealthy, RauthyUnhealthy, SecretsMigrated, UserEmailChange, UserPasswordReset, Test, BackchannelLogoutFailed, ScimTaskFailed, ForcedLogout, UserLoginRevoke, SuspiciousApiScan, LoginNewLocation, TokenIssued, } }

Keep in mind, that depending on proxies you might have in between, they might kill your connection to the events stream after some timeout without messages. Your app should work around this and either re-connect on connection loss, or you could fix it with a different proxy config or keep alive messages.

Testing

You can test your Event handler / pipeline setup when you log in to the Admin UI and simply press

the Test button

in the upper right corner in the Events sidebar. This will send a Test event to all configured

targets and listeners

independent of any configured event level restrictions. Test events will always be sent as long as

everything is working

properly.

EVENT_NOTIFY_LEVEL

You can set different levels for each target. By default, Only events with warning or higher are

sent via E-Mail

while Matrix / Slack would receive Events with notice or higher. If you want a different behavior,

you can get this:

[events]

# The notification level for events. Works the same way as

# a logging level. For instance: 'notice' means send out a

# notifications for all events with the notice level or higher.

# Possible values:

# - info

# - notice

# - warning

# - critical

#

# default: 'warning'

# overwritten by: EVENT_NOTIFY_LEVEL_EMAIL

notify_level_email = 'warning'

# default: 'notice'

# overwritten by: EVENT_NOTIFY_LEVEL_MATRIX

notify_level_matrix = 'notice'

# default: 'notice'

# overwritten by: EVENT_NOTIFY_LEVEL_SLACK

notify_level_slack = 'notice'

Event Persistence

Rauthys Admin UI has a component for inspecting Events from the past for analytical purposes. By

default, events with

the level info or higher are persisted for 31 days. After this period, they will be removed from

the database to

keep it clean.

You can configure both the level which should be persisted, for instance set "only persist Events with level warning or higher" and the days how long they should be kept.

[events]

# Define the level from which on events should be persisted

# inside the database. All events with a lower level will be

# lost, if there is no active event subscriber.

# Possible values:

# - info

# - notice

# - warning

# - critical

#

# default: 'info'

# overwritten by: EVENT_PERSIST_LEVEL

persist_level = 'info'

# Define the number of days when events should be cleaned

# up from the database.

#

# default: 30

# overwritten by: EVENT_CLEANUP_DAYS

cleanup_days = 30

level_* Values

There are a lot of values starting with level_*. These can be used to configure the level for

different kinds

of event being fired by Rauthy.

For instance, let's say you only want to receive events with a level of warning or higher, but you

also want to

receive a notification when there are more than 7 failed logins from an IP. By default, 7 failed

logins would trigger an

event with the level of notice. You can then set

[events]

# default: notice

# overwritten by: EVENT_LEVEL_FAILED_LOGINS_7

level_failed_logins_7 = 'notice'

to also receive these while still only receiving warning events.

The full list of these configurable levels is the following:

[events]

# The level for the generated Event after a backchannel

# logout has failed after exceeding all retries.

#

# default: critical

# overwritten by: EVENT_LEVEL_BACKCHANNEL_LOGOUT_FAILED

level_backchannel_logout_failed = 'critical'

# The level for the generated Event after a user was

# force-logged-out by an admin.

#

# default: notice

# overwritten by: EVENT_LEVEL_FORCE_LOGOUT

level_force_logout = 'notice'

# The level for the generated Event after an IP has

# been blacklisted

#

# default: warning

# overwritten by: EVENT_LEVEL_IP_BLACKLISTED

level_ip_blacklisted = 'warning'

# The level for the generated Event after the JWKS has

# been rotated

#

# default: notice

# overwritten by: EVENT_LEVEL_JWKS_ROTATE

level_jwks_rotate = 'notice'

# The level for the generated Event after a login from

# a new location for a user.

#

# default: notice

# overwritten by: EVENT_LEVEL_NEW_LOGIN_LOCATION

level_new_login_location = 'notice'

# The level for the generated Event after a new user has

# been registered.

#

# default: info

# overwritten by: EVENT_LEVEL_NEW_USER

level_new_user = 'info'

# The level for the generated Event after a user has

# changed his E-Mail

#

# default: notice

# overwritten by: EVENT_LEVEL_USER_EMAIL_CHANGE

level_user_email_change = 'notice'

# The level for the generated Event after a user has

# reset its password

#

# default: notice

# overwritten by: EVENT_LEVEL_USER_PASSWORD_RESET

level_user_password_reset = 'notice'

# The level for the generated Event after a user has

# been given the 'rauthy_admin' role

#

# default: notice

# overwritten by: EVENT_LEVEL_RAUTHY_ADMIN

level_rauthy_admin = 'notice'

# The level for the generated Event after a Rauthy

# entered a healthy state (again)

#

# default: notice

# overwritten by: EVENT_LEVEL_RAUTHY_HEALTHY

level_rauthy_healthy = 'notice'

# The level for the generated Event after a Rauthy

# instance has been started

#

# default: info

# overwritten by: EVENT_LEVEL_RAUTHY_START

level_rauthy_start = 'info'

# The level for the generated Event after a Rauthy

# entered an unhealthy state

#

# default: critical

# overwritten by: EVENT_LEVEL_RAUTHY_UNHEALTHY

level_rauthy_unhealthy = 'critical'

# The level for the generated Event after a new App

# version has been found

#

# default: notice

# overwritten by: EVENT_LEVEL_RAUTHY_VERSION

level_rauthy_version = 'notice'

# The level for the generated Event after DB secrets

# have been migrated to a new key

#

# default: notice

# overwritten by: EVENT_LEVEL_SECRETS_MIGRATED

level_secrets_migrated = 'notice'

# The level for the generated Event after a user revoked

# an illegal login with the link from the notification

# E-Mail for "login from unknown location"

#

# default: warning

# overwritten by: EVENT_LEVEL_USER_LOGIN_REVOKE

level_user_login_revoke = 'warning'

# The level for the generated Event after a SCIM

# update task ultimately failed after exceeding

# all retries.

#

# default: critical

# overwritten by: EVENT_LEVEL_SCIM_TASK_FAILED

level_scim_task_failed = 'critical'

# The level for the generated Event after Rauthy

# detected a suspicious API scanning request.

#

# default: notice

# overwritten by: EVENT_LEVEL_SUSPICIOUS_REQUEST

level_suspicious_request = 'notice'

# The level for the generated Event after a new JWT

# Token was issued.

#

# default: info

# overwritten by: EVENT_LEVEL_TOKEN_ISSUED

level_token_issued = 'info'

# The level for the generated Event after certain

# amounts of false logins from an IP

#

# default: critical

# overwritten by: EVENT_LEVEL_FAILED_LOGINS_25

level_failed_logins_25 = 'critical'

# default: critical

# overwritten by: EVENT_LEVEL_FAILED_LOGINS_20

level_failed_logins_20 = 'critical'

# default: warning

# overwritten by: EVENT_LEVEL_FAILED_LOGINS_15

level_failed_logins_15 = 'warning'

# default: warning

# overwritten by: EVENT_LEVEL_FAILED_LOGINS_10

level_failed_logins_10 = 'warning'

# default: notice

# overwritten by: EVENT_LEVEL_FAILED_LOGINS_7

level_failed_logins_7 = 'notice'

# default: info

# overwritten by: EVENT_LEVEL_FAILED_LOGIN

level_failed_login = 'info'

Backups

Postgres

If you are using Postgres as the main database, Rauthy does not do any backups.

There are a lot of way better tools out there to handle this task.

Hiqlite

If Rauthy is using Hiqlite, it does automatic backups, which can be configured with:

[cluster]

# When the auto-backup task should run.

# Accepts cron syntax:

# "sec min hour day_of_month month day_of_week year"

#

# default: "0 30 2 * * * *"

# overwritten by: HQL_BACKUP_CRON

backup_cron = "0 30 2 * * * *"

# Backups older than the configured days will be cleaned up on S3

# after the backup cron job `backup_cron`.

#

# default: 30

# overwritten by: HQL_BACKUP_KEEP_DAYS

backup_keep_days = 30

# Backups older than the configured days will be cleaned up locally

# after each `Client::backup()` and the cron job `HQL_BACKUP_CRON`.

#

# default: 3

# overwritten by: HQL_BACKUP_KEEP_DAYS_LOCAL

backup_keep_days_local = 3

All these backups are written inside the pod / container into data/state_machine/backups.

This difference makes it possible, that you could add a second volume mount to the container.

You then have the database itself on a different disk than the backups, which is the most simple and

straight forward

approach to have a basic backup strategy. However, it is recommended to use S3 for backups,

especially for HA

deployments.

Remote Backups to S3 Storage

Hiqlite backups can be pushed to an S3 bucket after creation. This way, you can keep only very low amount of local backups and older ones on cheaper object storage.

Rauthy has been tested against MinIO and Garage S3 storage and is working fine with both, so I

expect and standard S3

API to just work out of the box. You need to provide an Access Key + Secret with write access to an

existing bucket

and Rauthy will take care of the rest. All backups pushed to S3 will automatically encrypted with

the currently active

encryption.key_active from the Rauthy config.

The configuration is done with the following values:

[cluster]

# Access values for the S3 bucket where backups will be pushed to.

# overwritten by: HQL_S3_URL

s3_url = "https://s3.example.com"

# overwritten by: HQL_S3_BUCKET

s3_bucket = "my_bucket"

# overwritten by: HQL_S3_REGION

s3_region = "my_region"

# overwritten by: HQL_S3_PATH_STYLE

s3_path_style = true

# overwritten by: HQL_S3_KEY

s3_key = "my_key"

# overwritten by: HQL_S3_SECRET

s3_secret = "my_secret"

Disaster Recovery

If you really lost all your data, you can easily restore automatically from the latest backup. This

works with either a

local file backup or with an encrypted remote backup on s3 storage (as long as you still have

the ENC_KEY_ACTIVE

that has been used for the remote backup).

This, again, works only for Hiqlite. When you are using Postgres, you should use Postgres native

tooling like

pgBackRest which is way better at this.

The process is really simple:

- Have the cluster shut down. This is probably the case anyway, if you need to restore from a backup.

- Provide a backup file name on S3 storage with the

HQL_BACKUP_RESTOREENV value with prefixs3:object_name(encrypted), or a file on disk (plain sqlite file) with the prefixfile:/path/to/backup. - Start up Rauthy

- Check the logs and wait for the backup to be finished

- After a successful restore, Rauthy will start its normal operation

- Make sure to remove the

HQL_BACKUP_RESTOREenv value.

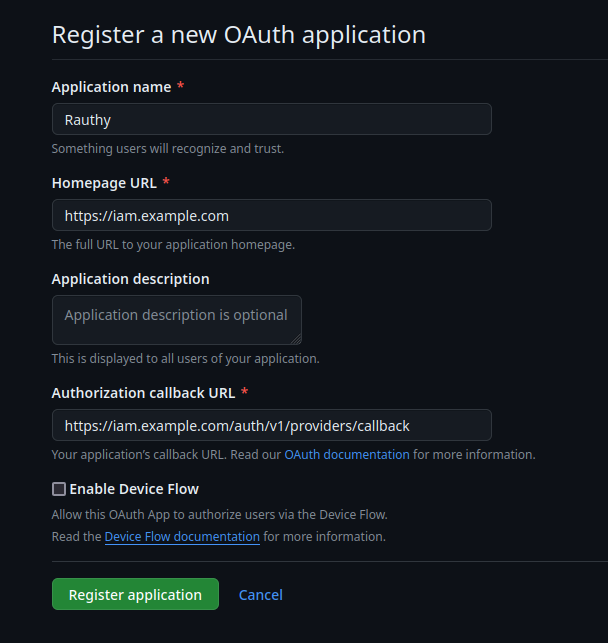

After a successful restore, you MUST remove the env var again!